Yet another ORM?

When coming across DataJoint, Python programmers often ask, “What is the difference between DataJoint and other ORMs such as SQLAlchemy or Django ORM?”

ORMs (object-relational mappers) are libraries that allow defining and manipulating data in relational databases using native constructs of the host language (e.g. Java, Python). Very commonly, ORMs represent tables as classes. Python already has several established ORMs such as SQLAlchemy, the Django ORM, Pony, and Peewee. Traditionally, ORMs are designed to provide a persistence layer for objects in applications.

In some respects, DataJoint may be classified as another ORM: it represents tables in the relational database as classes in Python and MATLAB. However, DataJoint is dedicated to doing one job exceptionally well: building data pipelines for science projects. It is centered on the concept of a workflow, from data entry to data acquisition to processing and analysis. Data dependencies and data integrity are carefully maintained at each step by means of referential constraints and transactional processing. Complex data types such as multidimensional arrays are transparently serialized.
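
The two database mechanisms mentioned above, referential constraints and transactions, can be illustrated with a minimal sketch using Python's built-in `sqlite3` module. This is plain SQL, not DataJoint syntax: DataJoint declares dependencies in its own table definitions on a MySQL-compatible server. The `session` and `recording` tables here are hypothetical. A foreign key rejects a recording whose session does not exist, and a transaction rolls back a group of inserts when one of them fails.

```python
import sqlite3

# Plain SQLite, not DataJoint. Hypothetical tables: each recording
# must reference an existing session (a referential constraint).
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE session (session_id INTEGER PRIMARY KEY, subject TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE recording ("
    " session_id INTEGER NOT NULL REFERENCES session(session_id),"
    " channel INTEGER NOT NULL,"
    " PRIMARY KEY (session_id, channel))"
)

# `with conn:` commits the transaction on success and rolls it back on error.
with conn:
    conn.execute("INSERT INTO session VALUES (1, 'mouse_A')")
    conn.execute("INSERT INTO recording VALUES (1, 0)")

# A recording for a nonexistent session violates the foreign key.
try:
    with conn:
        conn.execute("INSERT INTO recording VALUES (2, 0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# Transactional processing: the second insert fails, so the first is rolled back too.
try:
    with conn:
        conn.execute("INSERT INTO session VALUES (2, 'mouse_B')")
        conn.execute("INSERT INTO recording VALUES (3, 0)")  # session 3 does not exist
except sqlite3.IntegrityError:
    pass

n_sessions = conn.execute("SELECT COUNT(*) FROM session").fetchone()[0]
print(orphan_rejected, n_sessions)  # True 1
```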

Compared to other ORMs, DataJoint is more data-centric: the structure and integrity of the data are of primary concern. This contrasts with other ORMs, whose application-centric designs treat data storage as a secondary consideration.

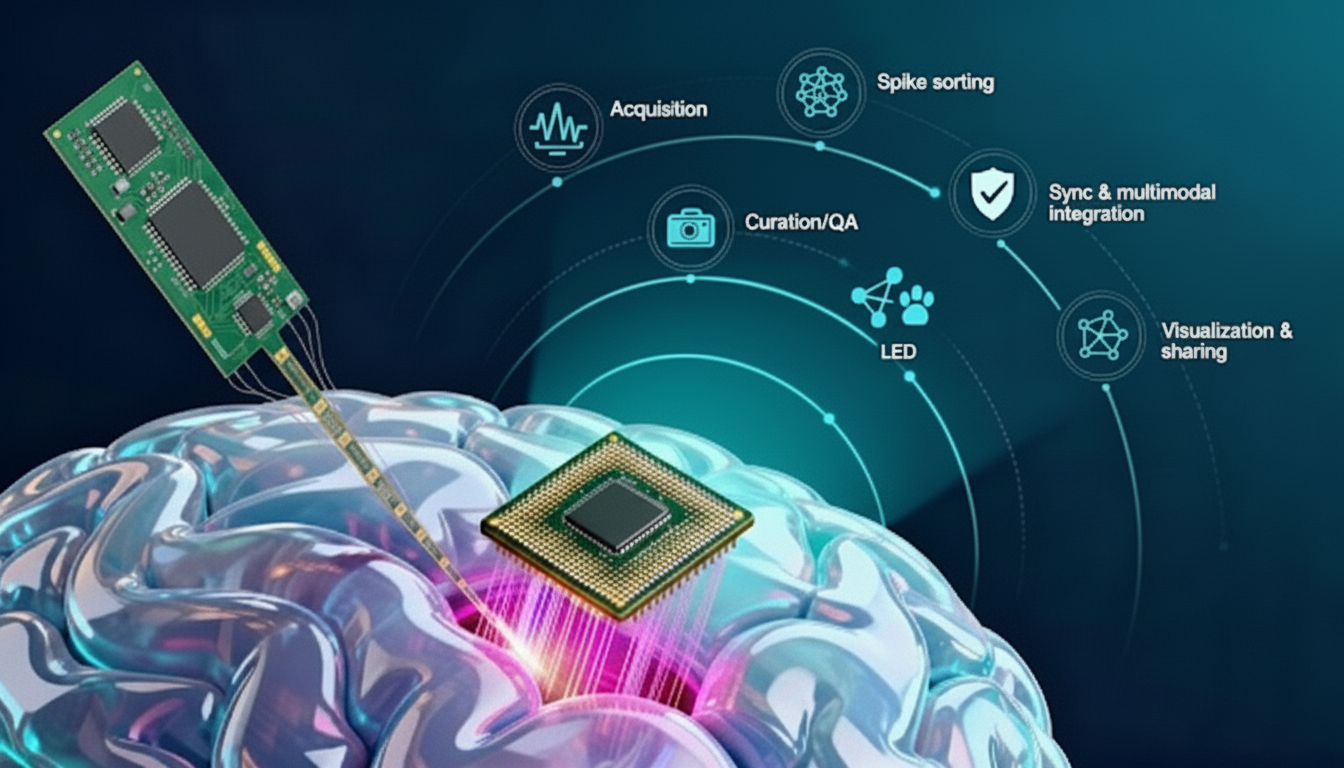

DataJoint is designed to integrate data and computation. Modern neuroscience experiments involve many steps of processing, synchronization, and filtering of acquired data, followed by many kinds of statistical analysis. DataJoint provides a streamlined AutoPopulate process whereby each DataJoint class defines both the structure of its data and the code for computing that data. Once the automatic computation is defined, DataJoint can automatically compute any missing data. A built-in job reservation process allows the work to be distributed across an arbitrary number of computing nodes.
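
The AutoPopulate idea can be illustrated with a toy, in-memory sketch in plain Python. It is not DataJoint's API. The class `ToyComputed`, its `populate` method, and the thread-based reservation are hypothetical stand-ins. In DataJoint itself, a computed table is declared as a `dj.Computed` class with a `make(self, key)` method and filled by calling `populate()`, and job reservation across machines goes through a jobs table kept in the database rather than an in-process lock. The sketch shows the two properties described above: one object holds both the results and the code that computes them, and `populate` computes only the keys that are still missing, with each key claimed by exactly one worker.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ToyComputed:
    """Hypothetical stand-in for a computed table; not DataJoint's API.

    It holds both its results (`rows`) and the code that produces
    one result per upstream key (`make`).
    """

    def __init__(self, make):
        self.make = make        # computation for a single upstream entry
        self.rows = {}          # key -> computed result
        self._reserved = set()  # keys claimed by a worker but not yet finished
        self._lock = threading.Lock()

    def _reserve_next(self, keys):
        # Atomically claim the first key that is neither computed nor reserved,
        # so two workers never compute the same key (toy job reservation).
        with self._lock:
            for key in keys:
                if key not in self.rows and key not in self._reserved:
                    self._reserved.add(key)
                    return key
            return None

    def populate(self, upstream, n_workers=1):
        """Compute only the missing keys of `upstream`; return how many were computed."""
        keys = list(upstream)

        def worker():
            computed = 0
            while (key := self._reserve_next(keys)) is not None:
                result = self.make(upstream[key])  # the slow part runs outside the lock
                with self._lock:
                    self.rows[key] = result
                    self._reserved.discard(key)
                computed += 1
            return computed

        with ThreadPoolExecutor(max_workers=n_workers) as pool:
            futures = [pool.submit(worker) for _ in range(n_workers)]
            return sum(f.result() for f in futures)


# Hypothetical upstream data: (subject, session) -> acquired trace.
raw = {("mouse_A", 1): [1.0, 2.0, 3.0], ("mouse_A", 2): [4.0, 6.0]}
mean_trace = ToyComputed(make=lambda trace: sum(trace) / len(trace))

first = mean_trace.populate(raw, n_workers=3)   # both keys were missing
raw[("mouse_B", 1)] = [10.0]                    # new data arrives upstream
second = mean_trace.populate(raw, n_workers=3)  # only the new key is computed
print(first, second)  # 2 1
```

Calling `populate` again with no new upstream entries computes nothing. The computation is defined once, and each call fills in only what is missing.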

Finally, DataJoint is designed for simplicity and quick learning. It is based on a minimal set of concepts sufficient to define, populate, compute, and query complex data pipelines.
