The Great Data Debate

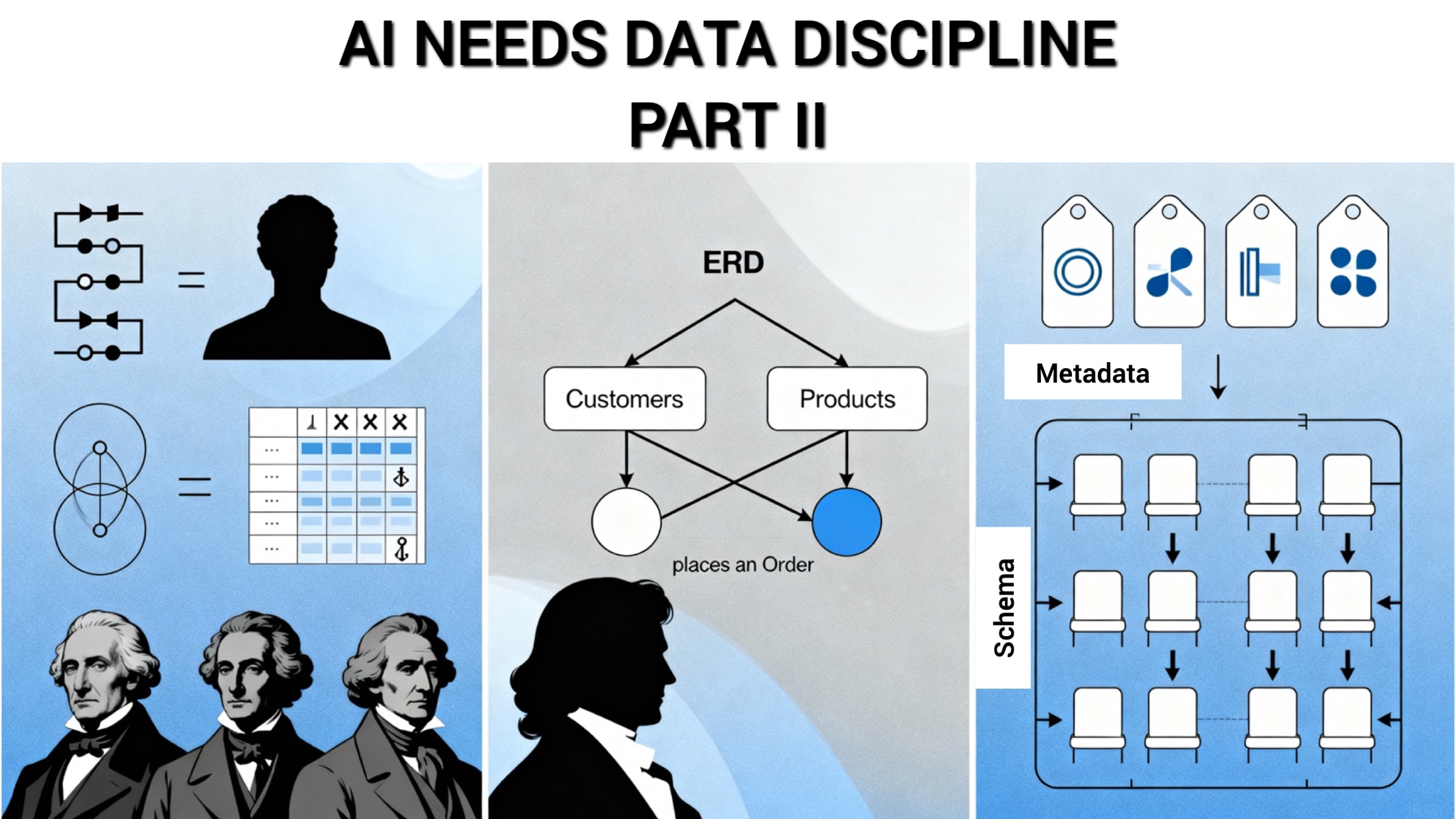

This article is Part 1 of our three-part series, AI Needs Data Discipline. In this opening installment, we explore the enduring debate between schema-on-write and schema-on-read, and how modern systems blend the two in data lakes, warehouses, and lakehouses. In Part 2, we’ll trace the mathematical foundations of structure and why they matter for AI.

The Great Data Debate

The Enduring Tension: Schema-on-Write vs. Schema-on-Read

Long before AI became a ubiquitous topic, the data management world was already grappling with a fundamental question: when and how should we define the structure of our data? This debate centers on two primary philosophies: "schema-on-write" and "schema-on-read."

The traditional schema-on-write approach, familiar to anyone who has worked with relational databases, demands that we define the data's blueprint—its fields, data types, and relationships—before any data is stored. Imagine meticulously designing an architectural plan before laying a single brick. This ensures consistency and predictability, as every piece of data has a designated place. However, this upfront design effort can make it challenging and costly to adapt if data formats need to change rapidly.

Conversely, schema-on-read postpones defining structure until the data is actually queried. This approach gained significant traction with the NoSQL movement in the 2010s, driven by the need to handle the massive, diverse datasets ("Big Data") generated by web applications. Schema-on-read allows for the quick ingestion of varied data types, offering great agility. Think of this as gathering all your building materials on-site and figuring out the assembly each time you need a structure. While flexible, this can sometimes lead to inconsistencies, as the interpretation of structure happens at the point of use.

The choice often reflects a project's ambition: unstructured approaches might let you build many simple cabins quickly, but only a planned, structured approach can yield a Burj Khalifa.

The Hybrid Middle Ground: Data Lakes, Warehouses, and Lakehouses

In today's complex data landscape, many organizations don't rigidly stick to one extreme. Instead, a hybrid approach is common. Raw data in its myriad formats (structured, semi-structured, unstructured) is often rapidly ingested into a data lake, a schema-on-read staging area. This "capture everything" strategy is great for speed and flexibility.

However, when it comes to reliable analytics, reporting, and especially AI applications that demand high data quality, a transformation often occurs. Selected data from the lake is cleaned, validated, and loaded into a structured, schema-on-write system like a data warehouse.

The more recent concept of the data lakehouse attempts to merge the best of both worlds. It aims to provide the flexible storage of a data lake with the robust data management, governance, and query performance features of a data warehouse, all within a single platform. Even in these advanced hybrid systems, a crucial principle often holds: while initial ingestion might be flexible (leaning towards schema-on-read), a subsequent step typically applies structure (schema-on-write) to ensure integrity for critical downstream applications, including AI.

Why did the structured approach gain prominence in the first place? Part 2 of AI Needs Data Discipline, "The Power of Schemas," describes the historical and theoretical foundations that make structured data so powerful and why it’s more than just a preference for order.

Related posts

The Power of Schemas

Restoring Gold Standard Science

A New Course for Scientific Discovery

Updates Delivered *Straight to Your Inbox*

Join the mailing list for industry insights, company news, and product updates delivered monthly.

.svg)